Sub-sections:

1: vSphere 5 Compute Configuration Maximums

2: vSphere 5 Memory Configuration Maximums

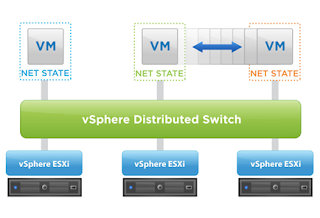

3: vSphere 5 Networking Configuration Maximums

4: vSphere 5 Orchestrator Configuration Maximums

5: vSphere 5 Storage Configuration Maximums

6: vSphere 5 Update Manager Configuration Maximums

7: vSphere 5 vCenter Server, and Cluster and Resource Pool Configuration Maximums

8: vSphere 5 Virtual Machine Configuration Maximums

1: vSphere 5 Compute Configuration Maximums

1 = Maximum number of virtual CPUs per Fault Tolerance protected virtual machine

4 = Maximum Fault Tolerance protected virtual machines per ESXi host

16 = Maximum number of virtual disks per Fault Tolerance protected virtual machine

25 = Maximum virtual CPUs per core

160 = Maximum logical CPUs per host

512 = Maximum virtual machines per host

2048 = Maximum virtual CPUs per host

64GB = Maximum amount of RAM per Fault Tolerance protected virtual machine
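
The per-host compute limits above interact: a host can run out of virtual CPUs per core long before it reaches 512 virtual machines. The following is a minimal sketch of a planning check, assuming the limits apply exactly as listed; the function name `check_host_plan` and its parameters are hypothetical and not part of any VMware tool.

```python
# Limits copied from the compute list above (vSphere 5).
MAX_VCPUS_PER_CORE = 25
MAX_LOGICAL_CPUS_PER_HOST = 160
MAX_VMS_PER_HOST = 512
MAX_VCPUS_PER_HOST = 2048


def check_host_plan(cores, logical_cpus, vm_vcpus):
    """Return the names of the limits a planned host would exceed.

    cores        -- physical cores in the host
    logical_cpus -- logical CPUs (cores times hardware threads per core)
    vm_vcpus     -- list holding the vCPU count of each planned virtual machine
    """
    violations = []
    total_vcpus = sum(vm_vcpus)

    if logical_cpus > MAX_LOGICAL_CPUS_PER_HOST:
        violations.append("logical CPUs per host")
    if len(vm_vcpus) > MAX_VMS_PER_HOST:
        violations.append("virtual machines per host")
    if total_vcpus > MAX_VCPUS_PER_HOST:
        violations.append("virtual CPUs per host")
    if total_vcpus > cores * MAX_VCPUS_PER_CORE:
        violations.append("virtual CPUs per core")
    return violations


if __name__ == "__main__":
    # 4 cores allow at most 4 * 25 = 100 vCPUs; 30 VMs with 4 vCPUs need 120.
    print(check_host_plan(cores=4, logical_cpus=8, vm_vcpus=[4] * 30))
```

An empty list means the plan stays within every compute limit in this section.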

2: vSphere 5 Memory Configuration Maximums

1 = Maximum number of swap files per virtual machine

1TB = Maximum swap file size

2TB = Maximum RAM per host

3: vSphere 5 Networking Configuration Maximums

2 = Maximum forcedeth 1Gb Ethernet ports (NVIDIA) per host

2 = Maximum VMDirectPath PCI/PCIe devices per virtual machine

4 = Maximum concurrent vMotion operations per host (1Gb/s network)

8 = Maximum concurrent vMotion operations per host (10Gb/s network)

8 = Maximum VMDirectPath PCI/PCIe devices per host

8 = Maximum nx_nic 10Gb Ethernet ports (NetXen) per host

8 = Maximum ixgbe 10Gb Ethernet ports (Intel) per host

8 = Maximum be2net 10Gb Ethernet ports (Emulex) per host

8 = Maximum bnx2x 10Gb Ethernet ports (Broadcom) per host

16 = Maximum bnx2 1Gb Ethernet ports (Broadcom) per host

16 = Maximum igb 1Gb Ethernet ports (Intel) per host

24 = Maximum e1000e 1Gb Ethernet ports (Intel PCI-e) per host

32 = Maximum tg3 1Gb Ethernet ports (Broadcom) per host

32 = Maximum e1000 1Gb Ethernet ports (Intel PCI-x) per host

32 = Maximum distributed switches (VDS) per vCenter

256 = Maximum Port Groups per Standard Switch (VSS)

256 = Maximum ephemeral port groups per vCenter

350 = Maximum hosts per VDS

1016 = Maximum active ports per host (VSS and VDS ports)

4088 = Maximum virtual network switch creation ports per standard switch (VSS)

4096 = Maximum total virtual network switch ports per host (VSS and VDS ports)

5000 = Maximum static port groups per vCenter

30000 = Maximum distributed virtual network switch ports per vCenter

6x10Gb + 4x1Gb = Maximum combination of 10Gb and 1Gb Ethernet ports per host

4: vSphere 5 Orchestrator Configuration Maximums

10 = Maximum vCenter Server systems connected to vCenter Orchestrator

100 = Maximum hosts connected to vCenter Orchestrator

150 = Maximum concurrent running workflows

15000 = Maximum virtual machines connected to vCenter Orchestrator

5: vSphere 5 Storage Configuration Maximums

2 = Maximum concurrent Storage vMotion operations per host

4 = Maximum QLogic 1Gb iSCSI HBA initiator ports per server

4 = Maximum Broadcom 1Gb iSCSI HBA initiator ports per server

4 = Maximum Broadcom 10Gb iSCSI HBA initiator ports per server

4 = Maximum software FCoE adapters

8 = Maximum non-vMotion provisioning operations per host

8 = Maximum concurrent Storage vMotion operations per datastore

8 = Maximum number of paths to a LUN (software iSCSI and hardware iSCSI)

8 = Maximum NICs that can be associated or port bound with the software iSCSI stack per server

8 = Maximum number of FC HBAs of any type

10 = Maximum VASA (vSphere Storage APIs – Storage Awareness) storage providers

16 = Maximum FC HBA ports

32 = Maximum number of paths to a FC LUN

32 = Maximum datastores per datastore cluster

62 = Maximum QLogic iSCSI: static targets per adapter port

64 = Maximum QLogic iSCSI: dynamic targets per adapter port

64 = Maximum hosts per VMFS volume

64 = Maximum Broadcom 10Gb iSCSI dynamic targets per adapter port

128 = Maximum Broadcom 10Gb iSCSI static targets per adapter port

128 = Maximum concurrent vMotion operations per datastore

255 = Maximum FC LUN IDs

256 = Maximum VMFS volumes per host

256 = Maximum datastores per vCenter

256 = Maximum targets per FC HBA

256 = Maximum iSCSI LUNs per host

256 = Maximum FC LUNs per host

256 = Maximum NFS mounts per host

256 = Maximum software iSCSI targets

1024 = Maximum number of total iSCSI paths on a server

1024 = Maximum number of total FC paths on a server

2048 = Maximum powered-on virtual machines per VMFS volume

2048 = Maximum virtual disks per host

9000 = Maximum virtual disks per datastore cluster

30720 = Maximum files per VMFS-3 volume

130690 = Maximum files per VMFS-5 volume

1MB = Maximum VMFS-5 block size (volume not upgraded from VMFS-3)

8MB = Maximum VMFS-3 block size

256GB = Maximum file size (1MB VMFS-3 block size)

512GB = Maximum file size (2MB VMFS-3 block size)

1TB = Maximum file size (4MB VMFS-3 block size)

2TB – 512 bytes = Maximum file size (8MB VMFS-3 block size)

2TB – 512 bytes = Maximum VMFS-3 RDM size

2TB – 512 bytes = Maximum VMFS-5 RDM size (virtual compatibility)

64TB = Maximum VMFS-3 volume size

64TB = Maximum FC LUN size

64TB = Maximum VMFS-5 RDM size (physical compatibility)

64TB = Maximum VMFS-5 volume size
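
The VMFS-3 entries above follow a simple pattern: each 1MB of block size allows 256GB of file size, except that the 8MB entry stops 512 bytes short of 2TB. The sketch below encodes that pattern as read off this list; it is an illustration rather than a statement of how VMFS computes the limit, and the function name `vmfs3_max_file_size_bytes` is hypothetical.

```python
GIB = 1024 ** 3
TIB = 1024 ** 4

# Block sizes (in MB) that appear in the VMFS-3 entries above.
VMFS3_BLOCK_SIZES_MB = (1, 2, 4, 8)


def vmfs3_max_file_size_bytes(block_size_mb):
    """Return the listed maximum file size for a VMFS-3 block size.

    Assumes 256GB per MB of block size, capped at 2TB minus 512 bytes,
    which reproduces the four values in the list.
    """
    if block_size_mb not in VMFS3_BLOCK_SIZES_MB:
        raise ValueError("VMFS-3 block size must be 1, 2, 4 or 8 MB")
    size = block_size_mb * 256 * GIB
    return min(size, 2 * TIB - 512)


if __name__ == "__main__":
    for mb in VMFS3_BLOCK_SIZES_MB:
        print(mb, "MB block ->", vmfs3_max_file_size_bytes(mb), "bytes")
```

This makes it easy to check whether a planned virtual disk fits on a VMFS-3 datastore with a given block size before creating it.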

6: vSphere 5 Update Manager Configuration Maximums

1 = Maximum ESXi host upgrades per cluster

24 = Maximum VMware Tools upgrades per ESXi host

24 = Maximum virtual machine hardware upgrades per host

70 = Maximum VUM Cisco VDS updates and deployments

71 = Maximum ESXi host remediations per VUM server

71 = Maximum ESXi host upgrades per VUM server

75 = Maximum virtual machine hardware scans per VUM server

75 = Maximum virtual machine hardware upgrades per VUM server

75 = Maximum VMware Tools scans per VUM server

75 = Maximum VMware Tools upgrades per VUM server

75 = Maximum ESXi host scans per VUM server

90 = Maximum VMware Tools scans per ESXi host

90 = Maximum virtual machine hardware scans per host

1000 = Maximum VUM host scans in a single vCenter server

10000 = Maximum VUM virtual machine scans in a single vCenter server

7: vSphere 5 vCenter Server, and Cluster and Resource Pool Configuration Maximums

100% = Maximum failover as percentage of cluster

8 = Maximum resource pool tree depth

32 = Maximum concurrent host HA failovers

32 = Maximum hosts per cluster

512 = Maximum virtual machines per host

1024 = Maximum children per resource pool

1600 = Maximum resource pools per host

1600 = Maximum resource pools per cluster

3000 = Maximum virtual machines per cluster
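
Taken together, the host and cluster limits above mean that the cluster-wide limit is reached first on larger clusters: 32 hosts at 512 virtual machines each would be 16384, far above 3000. A minimal sketch of that arithmetic follows, assuming both limits apply simultaneously; the function name `cluster_vm_capacity` is hypothetical.

```python
# Limits copied from the cluster list above (vSphere 5).
MAX_HOSTS_PER_CLUSTER = 32
MAX_VMS_PER_HOST = 512
MAX_VMS_PER_CLUSTER = 3000


def cluster_vm_capacity(hosts):
    """Return the most virtual machines a cluster of `hosts` hosts may hold.

    The result is the smaller of the summed per-host limit and the
    cluster-wide limit.
    """
    if not 1 <= hosts <= MAX_HOSTS_PER_CLUSTER:
        raise ValueError("a cluster holds between 1 and 32 hosts")
    return min(hosts * MAX_VMS_PER_HOST, MAX_VMS_PER_CLUSTER)


if __name__ == "__main__":
    for n in (1, 5, 6, 32):
        print(n, "hosts ->", cluster_vm_capacity(n), "virtual machines")
```

With these numbers, the per-host limit is the binding one up to five hosts (2560 virtual machines); from six hosts onward the cluster limit of 3000 applies.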

8: vSphere 5 Virtual Machine Configuration Maximums

1 = Maximum IDE controllers per virtual machine

1 = Maximum USB 3.0 devices per virtual machine

1 = Maximum USB controllers per virtual machine

1 = Maximum Floppy controllers per virtual machine

2 = Maximum Floppy devices per virtual machine

3 = Maximum Parallel ports per virtual machine

4 = Maximum IDE devices per virtual machine

4 = Maximum Virtual SCSI adapters per virtual machine

4 = Maximum Serial ports per virtual machine

4 = Maximum VMDirectPath PCI/PCIe devices per virtual machine

6 (if 2 of them are Teradici devices) = Maximum VMDirectPath PCI/PCIe devices per virtual machine

10 = Maximum Virtual NICs per virtual machine

15 = Maximum Virtual SCSI targets per virtual SCSI adapter

20 = Maximum xHCI USB controllers per virtual machine

20 = Maximum USB devices connected to a virtual machine

32 = Maximum Virtual CPUs per virtual machine (Virtual SMP)

40 = Maximum concurrent remote console connections to a virtual machine

60 = Maximum Virtual SCSI targets per virtual machine

60 = Maximum Virtual Disks per virtual machine (PVSCSI)

128MB = Maximum Video memory per virtual machine

1TB = Maximum Virtual Machine swap file size

1TB = Maximum RAM per virtual machine

2TB – 512 bytes = Maximum virtual machine disk size