VMware Cloud Foundation 5.1

VMware Cloud Foundation 5.1 delivers key enhancements across storage, networking, compute, and lifecycle management that enable customers to scale their private cloud environments and improve resiliency.

VCF Support for vSAN Express Storage Architecture (ESA)

VCF 5.1 enhances support for NVMe storage platforms by adding support for vSAN Express Storage Architecture (ESA), which enables customers to deploy next-generation servers that deliver higher performance, greater scalability, and improved efficiency. vSAN ESA coexists with vSAN Original Storage Architecture (OSA) and is designed to exploit the full potential of the latest hardware, reaching new levels of efficiency, scalability, and performance and unlocking new capabilities for VCF customers.

Networking and Security Enhancements

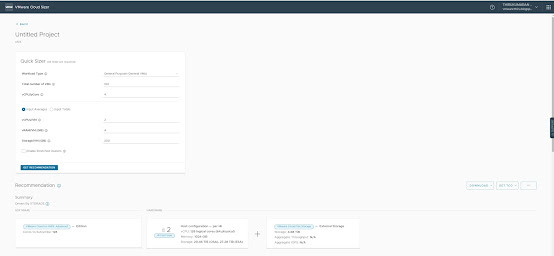

The VCF 5.1 release contains several enhancements that simplify the configuration of advanced networking and security. The most impactful change is the improved SDDC Manager workflows, which allow administrators to configure new workload domains and clusters with multiple physical network adapters and multiple virtual distributed switches prepared for NSX.

Other networking enhancements further leverage NSX: a simplified and compliant topology for stretched clusters configured for vSAN OSA, and the ability to configure edge clusters without 2-tier routing.

These fine-tuned networking enhancements allow administrators to deliver highly performant networking and security topologies that can be easily scaled and lifecycle managed.

Key enhancements available with VMware Cloud Foundation 5.1 include:

- VMware Aria Suite Lifecycle Cloud Management integration.

- Lifecycle management updates, including asynchronous prechecks and support for vSphere Lifecycle Manager images in VCF management domains.

- Numerous networking enhancements for vSAN stretched clusters, NSX Edge clusters, and SDDC Manager workflows.

- The VMware Identity Broker service allows administrators to connect to third-party (external) identity providers (IdPs) for handling and processing identities, credentials, and authentication, including multifactor authentication. Okta is now supported as a third-party identity provider in VMware Cloud Foundation environments.

- A new Terraform provider for VMware Cloud Foundation that enables infrastructure as code: administrators can deploy, operate, and manage VMware Cloud Foundation from machine-readable definition files that describe a specific desired state.
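
To make the "desired state" idea from the last bullet concrete, here is a minimal Python sketch of how a tool can compare a definition file with what is actually deployed and derive the actions to take. It is a generic illustration, not the Terraform provider's API: the `plan_changes` function, the workload domain names, and the fields are all hypothetical.

```python
# Illustrative only: a generic desired-state diff.
# This is NOT the Terraform provider for VMware Cloud Foundation or its API.
from typing import Any


def plan_changes(
    desired: dict[str, Any], actual: dict[str, Any]
) -> dict[str, list[str]]:
    """Compare a desired definition with observed state and list needed actions."""
    plan: dict[str, list[str]] = {"create": [], "update": [], "delete": []}
    for name, spec in desired.items():
        if name not in actual:
            plan["create"].append(name)   # declared but not deployed
        elif actual[name] != spec:
            plan["update"].append(name)   # deployed but drifted from the definition
    for name in actual:
        if name not in desired:
            plan["delete"].append(name)   # deployed but no longer declared
    return plan


# Hypothetical workload domains and fields, chosen only for illustration.
desired = {
    "wld-01": {"clusters": 2, "storage": "vsan-esa"},
    "wld-02": {"clusters": 1, "storage": "vsan-osa"},
}
actual = {
    "wld-01": {"clusters": 1, "storage": "vsan-esa"},
    "wld-03": {"clusters": 1, "storage": "vsan-osa"},
}

plan = plan_changes(desired, actual)
print(plan)
# {'create': ['wld-02'], 'update': ['wld-01'], 'delete': ['wld-03']}
```

The point of the declarative approach is that the definition file stays the single source of truth: the tool works out what to create, change, or remove, rather than the administrator scripting each step.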

Accelerate Data Driven Innovation in VMware Cloud Foundation

VMware Cloud Foundation benefits from the release of the next generation of VMware Data Services Manager, as well as partnerships and Tech Previews with Google Cloud and MinIO, all of which will help customers accelerate their data-driven innovation.

VMware Live Recovery

VMware Live Recovery is a new solution that provides ransomware protection and disaster recovery across VMware Cloud in one unified console. It is designed to help organizations protect their VMware-based applications and data from a wide variety of threats, including ransomware attacks, infrastructure failure, and human error. It brings together the functions of two established products, VMware Site Recovery Manager and VMware Cloud Disaster Recovery with Ransomware Recovery, under a unified, flexible, SaaS-based console, so customers can realize comprehensive enterprise protection within a single solution.

I hope this has been informative and thank you for reading!